I was playing around with LM Studio and some local LLMs when I read about MCP servers. The idea struck me to integrate my Home Assistant, and I found an MCP server plugin that Home Assistant can expose. It seemed like a great idea to let my local AI talk straight to Home Assistant. The plan was simple – just point LM Studio at the MCP endpoint – but when I tried it, Home Assistant logged an error and the connection failed. So began a little journey that ended in the following workaround.

Why the direct approach failed

When I simply pointed LM Studio at the Home Assistant MCP endpoint (https://home-assistant.example.com/mcp_server/sse), the Home Assistant log started filling up with cryptic errors. It turns out that LM Studio’s MCP feature is built around streamable HTTP or stdio; it never negotiates an SSE connection, so the MCP plugin on Home Assistant gets confused. This medium.com post explains the difference between SSE and stdio, if you’re interested.

I found a GitHub issue that roughly discusses this, but there seems to be no solution and no further discussion (maybe I’m almost the only one with this issue, or no one uses LM Studio with Home Assistant MCP?). In any case, the only workaround I found is to make LM Studio think it’s talking to a plain stdio process, and then have that process talk to Home Assistant over SSE.
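To make the mismatch a bit more concrete, here is a rough Python sketch of the framing an SSE client has to understand. In MCP's older HTTP+SSE transport the server first sends an `endpoint` event telling the client where to POST its messages, and later delivers JSON-RPC replies as `message` events. A stdio client instead just reads and writes newline-delimited JSON. The sample stream below, including the `/mcp_server/messages/abc123` path, is made up for illustration and not captured from Home Assistant.

```python
from typing import Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one dict per Server-Sent Event from an iterable of text lines."""
    event, data = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line terminates the current event.
            if data:
                yield {"event": event, "data": "\n".join(data)}
            event, data = "message", []
        elif line.startswith(":"):
            # Lines starting with a colon are comments (often keep-alives).
            continue
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # a single leading space is not part of the value
            if field == "event":
                event = value
            elif field == "data":
                data.append(value)


# Illustrative sample only: the session path is an invented placeholder.
SAMPLE = [
    "event: endpoint\n",
    "data: /mcp_server/messages/abc123\n",
    "\n",
    ": ping\n",
    "event: message\n",
    'data: {"jsonrpc": "2.0", "id": 1, "result": {}}\n',
    "\n",
]

if __name__ == "__main__":
    for ev in parse_sse(SAMPLE):
        print(ev["event"], "->", ev["data"])
```

A client that only speaks stdio has no notion of the `endpoint` handshake or the event framing, which is the gap a translating process in the middle has to fill.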

Enter the MCP‑Proxy

The community has already built a tiny tool called mcp‑proxy that does exactly what we need:

- It connects to Home Assistant over SSE.

- It exposes a standard input/output stream that LM Studio can consume.

There are two ways to run it: as a Docker container or as a standalone binary. Below I’ll walk through both, showing the exact configuration you’ll paste into LM Studio’s mcp.json.

Either way you’ll need to install the Home Assistant MCP Plugin and generate a long-lived access token, which can be created in your Home Assistant user profile. The mcp-proxy can be found on sparfenyuk’s GitHub, where you’ll also find further configuration options and documentation. I will only focus on the minimum subset you’ll need to get it running (which is probably enough anyway).
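Before wiring anything into LM Studio, it can save some head-scratching to confirm that the token itself works. The sketch below calls Home Assistant's REST API root (`/api/`), which answers with HTTP 200 for a valid bearer token. The base URL and the token value are placeholders, and the function names are my own, not part of any library.

```python
import urllib.request


def build_api_request(base_url: str, token: str) -> urllib.request.Request:
    """Build a GET request for the Home Assistant REST API root."""
    url = base_url.rstrip("/") + "/api/"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


def token_works(base_url: str, token: str, timeout: float = 10.0) -> bool:
    """Return True if Home Assistant accepts the token, False on any error."""
    try:
        with urllib.request.urlopen(build_api_request(base_url, token), timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # Covers HTTP errors such as 401 as well as connection problems and timeouts.
        return False


if __name__ == "__main__":
    # Placeholder values: substitute your own instance URL and token.
    ok = token_works("https://home-assistant.example.com", "<YOUR_ACCESS_TOKEN>")
    print("token accepted" if ok else "token rejected or host unreachable")
```

If this prints that the token was rejected, the problem lies with the token or the URL rather than with LM Studio or the proxy.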

Option A – Docker Image

The official ghcr.io/sparfenyuk/mcp-proxy image ships without the optional uvx dependency that is needed for execution (apparently for security reasons), so you’ll need to build a new image yourself.

# Dockerfile
FROM ghcr.io/sparfenyuk/mcp-proxy:latest

# Install the 'uv' package
RUN python3 -m ensurepip && pip install --no-cache-dir uv

ENV PATH="/usr/local/bin:$PATH" \
    UV_PYTHON_PREFERENCE=only-system

ENTRYPOINT ["mcp-proxy"]

Build it locally:

docker build -t my-mcp-proxy-image .

Now, tell LM Studio to start this container on‑demand and hand it the Home Assistant SSE endpoint plus your token.

{
  "mcpServers": {
    "home-assistant": {
      "command": "docker",
      "args": [
        "run", "-i", "--rm", "my-mcp-proxy-image",
        "--headers", "Authorization",
        "Bearer <YOUR_ACCESS_TOKEN>",
        "https://home-assistant.example.com/mcp_server/sse",
        "uvx mcp-server-fetch"
      ]
    }
  }
}

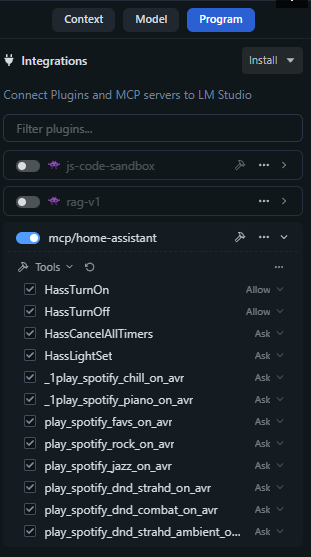

LM Studio will launch the container for every request you take and the proxy will connect to Home Assistant over SSE. The Integration tab should display your new MCP server like so:

Option B – Stand‑alone Binary

If you prefer not to run Docker, just install the tool directly on your host. Other installation methods are listed on the official GitHub page; I will only highlight one.

pip install uv
uv tool install mcp-proxy # installs it into ~/.local/bin by default

Add ~/.local/bin (or wherever the binary lives) to your PATH and restart LM Studio so that it picks up the updated PATH.

Edit LM Studio’s mcp.json:

{
  "mcpServers": {
    "mcp-proxy": {
      "command": "mcp-proxy",
      "args": [
        "https://home-assistant.example.com/mcp_server/sse"
      ],
      "env": {
        "API_ACCESS_TOKEN": "<YOUR_ACCESS_TOKEN>"
      }
    }
  }
}

Now LM Studio will spawn mcp-proxy as a child process each time you need an MCP connection. The proxy immediately opens the SSE stream to Home Assistant, so everything stays local and fast. The integration tab in LM Studio will display the same integration as the screenshot in Option A shows.
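If the server does not show up, the two usual suspects are a malformed mcp.json or a command that is not on the PATH. The following is a minimal sketch of a checker, assuming the `mcpServers` layout shown in this post. It parses the file, looks up each `command` the way a shell would, and prints a line you can paste into a terminal to run the server by hand. The function name and the idea of passing the file path as an argument are my own choices. Note that values from `env` are not included in the printed line.

```python
import json
import shlex
import shutil
import sys


def describe_servers(config_text: str) -> list:
    """Return one report dict per entry under "mcpServers"."""
    config = json.loads(config_text)  # raises ValueError on malformed JSON
    report = []
    for name, spec in config.get("mcpServers", {}).items():
        command = spec["command"]
        args = spec.get("args", [])
        report.append({
            "name": name,
            # None means the command cannot be found on the current PATH.
            "resolved": shutil.which(command),
            # Shell-quoted command line for running the server manually.
            "shell_line": shlex.join([command, *args]),
        })
    return report


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python check_mcp.py /path/to/mcp.json
    with open(sys.argv[1], encoding="utf-8") as fh:
        for entry in describe_servers(fh.read()):
            status = entry["resolved"] or "NOT FOUND on PATH"
            print(f'{entry["name"]}: {status}')
            print(f'  run manually: {entry["shell_line"]}')
```

Keep in mind that the PATH seen by this script is the one from your terminal, which may differ from the one LM Studio inherits when started from a desktop launcher.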

Closing Thoughts

With the MCP‑Proxy in place I can now give LM Studio a natural language command like “turn on the living‑room light” and watch Home Assistant execute it instantly, with no manual webhooks or REST calls required. The whole flow stays local: the proxy talks to Home Assistant over SSE, while LM Studio reads from and writes to the proxy’s standard input and output streams. This makes the integration both fast and secure.

If in the future Home Assistant adds native support for streamable HTTP, or LM Studio learns how to negotiate SSE directly, this extra layer will no longer be necessary. Until then, the MCP‑Proxy offers a simple, reliable workaround that turns your local AI playground into a real‑world home‑automation controller.